Online communities protected

If you’re in charge of Trust & Safety for a growing online platform, chances are you’re overwhelmed. You need to scale up your moderation for a rapidly increasing volume of content, but you might not have the budget to add more content moderators to your team.

This is a very risky position: a strained Trust & Safety operation leaves the entire platform vulnerable to shutdown, whether from irreversible negative publicity or from being deplatformed.

Spectrum Labs’ Guardian solution helps your Trust & Safety team achieve content moderation at scale. Guardian’s Contextual AI and automation detect a higher volume of harmful content with greater confidence and flag your platform’s most harmful users. Instead of trying to moderate each message, your team can moderate users: ban, mute, or warn them, and immediately remove 30%-60% of the toxic content on your platform.

Spectrum Labs’ industry-leading Guardian solution helps Trust & Safety teams identify harmful and healthy user behavior to better manage their online community.

Guardian works in any language and can be scaled to cover more content as the platform grows.

Some behaviors are obvious and easy to recognize, but others depend on context. Spectrum Labs’ Contextual AI analyzes metadata from user activity, profiles, room reputations, and conversation history to accurately identify harmful content and complex behaviors that other solutions miss.

This involves more than keyword detection. Contextual AI looks at a range of metadata and circumstances to find illicit activity such as:

Spectrum Labs’ patented multi-language support for Guardian allows platforms to scale globally without weakening their Trust & Safety commitments or content moderation capabilities.

Across every kind of platform, from dating apps to social media to games and marketplaces, a small percentage of bad actors produce a large percentage of toxic content.

Rather than having your team review and moderate each message individually, Guardian provides the industry’s only user-level moderation, so Trust & Safety teams can take action against the users themselves. This extends your team’s capacity to cover more content by stopping adversarial content at the source.
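To make the user-level idea concrete, here is a minimal Python sketch. It assumes each message has already been marked as flagged or not by some upstream classifier. The `Message` type, the `flag_users` helper and its `min_flagged` threshold are hypothetical illustrations, not Guardian’s API. The sketch groups flagged messages by author, picks out repeat offenders, and reports what share of all flagged content those users produced.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Message:
    # Hypothetical record: the author and whether an upstream classifier flagged it.
    user_id: str
    flagged: bool


def flag_users(messages: list[Message], min_flagged: int = 3) -> tuple[list[str], float]:
    """Return users with at least `min_flagged` flagged messages (worst first),
    plus the share of all flagged messages those users produced."""
    # Count flagged messages per author.
    counts = Counter(m.user_id for m in messages if m.flagged)
    total_flagged = sum(counts.values())
    # Keep only repeat offenders, ordered by how much flagged content they produced.
    offenders = [user for user, n in counts.most_common() if n >= min_flagged]
    if total_flagged == 0:
        return offenders, 0.0
    covered = sum(counts[user] for user in offenders)
    return offenders, covered / total_flagged


# Toy data (made up for illustration): one user sends 4 of the 5 flagged messages.
msgs = [Message("a", True)] * 4 + [Message("b", True)] + [Message("c", False)] * 5
print(flag_users(msgs))  # (['a'], 0.8)
```

Acting on user "a" alone would address four of the five flagged messages in this toy example, which is the intuition behind moderating users rather than individual messages.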

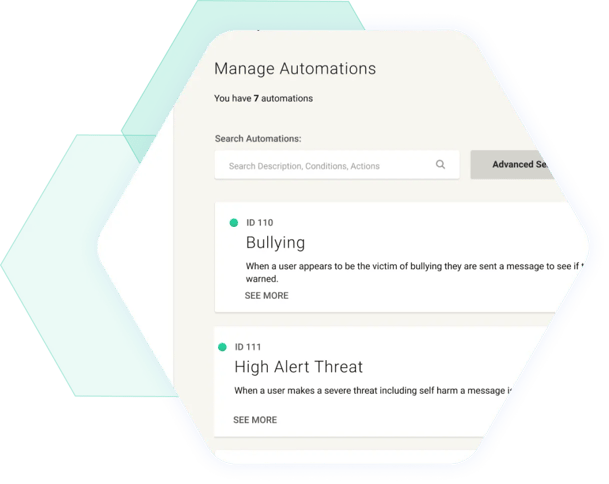

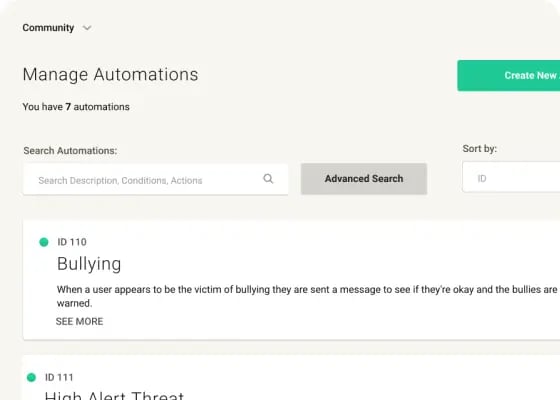

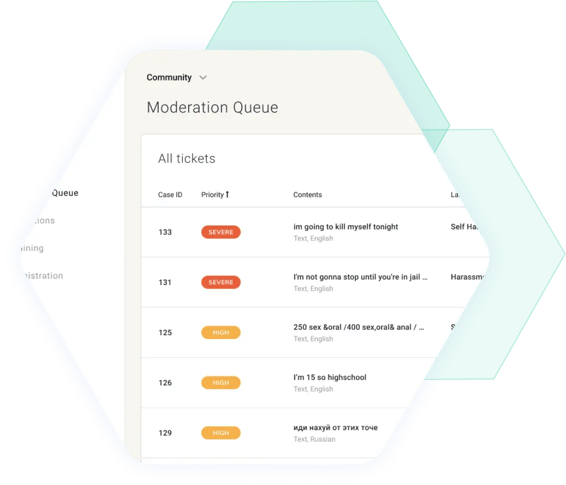

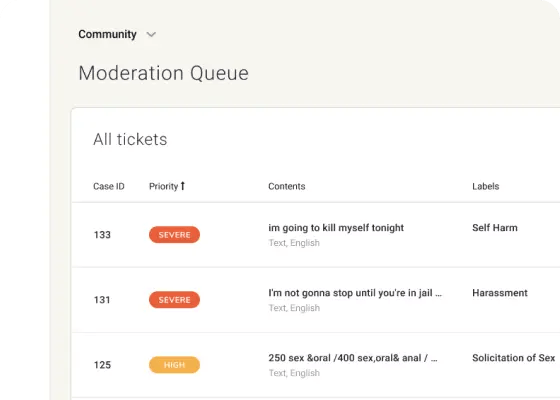

Prioritize cases by severity for moderator decisions. The queue can be reviewed at the user or content level and integrated with your internal systems.

Moderate at scale. Automatically block or remove content, warn or suspend users, and send special cases to the queue for human review.

Gain 360-degree insight and measure the impact of efforts to minimize toxicity and maximize positive interactions to drive user retention and growth.

If your team prefers to use its own UI, we can help trigger custom actions with configurable webhooks that respond in milliseconds.
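On your side, a webhook is an HTTP endpoint that receives events and triggers your own actions. The minimal Python sketch below uses only the standard library. It assumes a JSON payload with `user_id` and `action` fields, and it assumes the set of action names in `ALLOWED_ACTIONS`. Both the payload shape and the action names are hypothetical, since Guardian’s actual webhook schema is not described here. `handle_event` validates the event, and `WebhookHandler` exposes it over HTTP.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical action names your own moderation tooling might support.
ALLOWED_ACTIONS = {"ban", "mute", "warn", "remove_content", "queue_for_review"}


def handle_event(body: bytes) -> dict:
    """Validate a webhook body. The payload shape
    ({"user_id": ..., "action": ...}) is an assumption for illustration."""
    event = json.loads(body)
    if not isinstance(event, dict):
        return {"ok": False, "error": "payload must be a JSON object"}
    action = event.get("action")
    if action not in ALLOWED_ACTIONS:
        return {"ok": False, "error": f"unknown action: {action!r}"}
    # Replace this with a call into your own systems (ban, mute, queue, etc.).
    return {"ok": True, "user_id": event.get("user_id"), "action": action}


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            result = handle_event(self.rfile.read(length))
            status = 200 if result["ok"] else 400
        except ValueError:  # covers malformed JSON and undecodable bytes
            result, status = {"ok": False, "error": "invalid JSON"}, 400
        payload = json.dumps(result).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8080) -> None:
    # Blocks forever; call this from your own entry point.
    HTTPServer(("127.0.0.1", port), WebhookHandler).serve_forever()
```

Keeping the validation in `handle_event` separate from the HTTP plumbing makes the decision logic easy to unit-test without starting a server.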

Overnight I saw a 50% reduction in manual moderation of display names.

David Brown SVP, Trust and Safety

In Spectrum Labs, we have a partner who is in the trenches with us…we're seeing great results and know our players will experience a significant positive impact on the safety, health, and enjoyment of our games.

Weszt Hart Head of Player Dynamics

Spectrum Labs was extremely easy to integrate. We were up and running in a few days.

Michelle Kennedy CEO and Founder